AI models generate text, images and videos – and sometimes stray far from reality. Systems like ChatGPT, DALL-E and Stable Diffusion currently offer an impressive demonstration of what learning systems can do. For the first time, many people can interact with such models, try out scenarios and test use cases. “This step is particularly important and essential, especially for awareness building,” says Martin Boyer, project leader and senior research engineer at AIT Austrian Institute of Technology, Center for Digital Safety and Security. “Also, these developments make us realize how important it is to always critically question info on the net.”

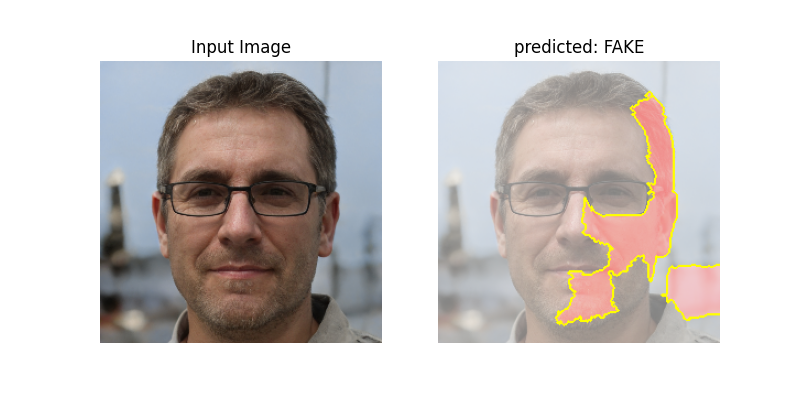

But verifying content is becoming increasingly difficult. Software now makes it possible to forge content on conventional computers, even without great expertise. So-called deepfakes are one example: in videos, deceptively genuine statements are put into the mouths of people, mostly prominent ones, who never made them in that form. The manipulation is often almost impossible to detect with the naked eye. To ensure that fact checks are nevertheless possible, AIT is developing media forensic assistance systems for verifying images and videos – an important component of the GADMO project.

Manipulated files often show characteristic traces that can indicate forgery. Users of the systems receive a hint, can conduct further research, and must ultimately decide for themselves whether to classify the content as manipulated. “Our tools are designed to support a person’s assessment,” says Martin Boyer. “Currently, for example, very high accuracy is already achieved in the recognition of artificially generated faces. Here, we already have very great difficulties with human assessment.” Time also plays an important role, he adds: as a rule, the more effort and expertise have gone into a fake, the harder it is to detect.

Artificial intelligence is used at several points in the development of these tools. First, AI models learn from previous forgeries which suspicious features they have in common – a central basis for later identifying new material. Second, the AIT team uses generative models to create synthetic data sets for training its assistance systems. After all, none of the images generated by DALL-E or Stable Diffusion are real, which makes them ideal training material for media forensic AI systems.

In essence, however, it is a race. One side uses learning algorithms to generate content artificially, the other uses them to identify those fakes. And as tampering technologies continue to evolve, detection tools must keep pace and be regularly updated or enhanced. “In addition, the detection of generated or altered content involves multiple analysis methods, the results of which must be presented together and in an understandable way,” says Martin Boyer.

Understandable in this case means that the forensic assistance systems must also point out the capabilities and limitations of the detection methods themselves in order to provide the basic knowledge needed for a sound evaluation of digital content. “We are developing AI tools to help people deal with the complexity of digitization,” says Martin Boyer. “Technology is an important vehicle to support media and government in their daily work.” In the GADMO project, researchers and fact checkers are jointly developing AIT tools to better address the specific challenges posed by disinformation campaigns. You will learn more about the results here later.