GADMO and the EDMO Ireland Hub have presented a detailed analysis of how major digital platforms deal with misinformation and disinformation. It shows that the platforms’ self-disclosures leave much to be desired. Overall, the platforms do too little to curb the spread of false claims and disinformation.

The analysis specifically examines whether Google, Meta, Microsoft, TikTok and X/Twitter comply with the Code of Practice on Disinformation. To this end, a team of independent researchers analysed the reports submitted by the platform companies in detail.

The Code of Practice on Disinformation (CoP) is a self-regulatory framework initiated by the EU Commission and signed by, among others, the major technology companies Google, Meta, Microsoft and TikTok. It sets out numerous commitments obliging platforms to act against dis- and misinformation and specifying how they should do so. These include, for example, transparency rules, the enforcement of community guidelines and the obligation to cooperate with fact-checkers and researchers. The first version of the CoP was published in 2018 and subsequently revised. The current version was published in 2022 and contains 128 concrete measures against dis- and misinformation. You can find the full text of the code here.

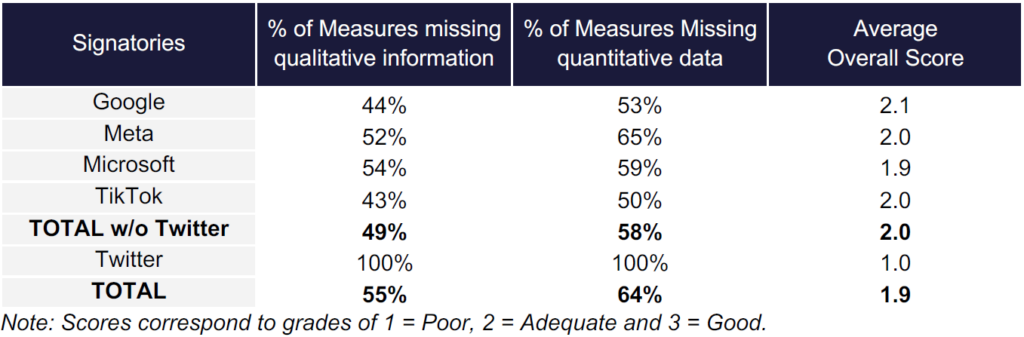

Using an assessment scheme and a three-step coding process, the researchers evaluated both qualitative and quantitative information from the platforms and assigned an overall score of 1 (poor), 2 (adequate) or 3 (good) for each measure.

Lack of information and data

The results show that the overall quality of the platform reports is less than adequate. For about half of the applicable measures, qualitative information was incomplete or missing. Even more significantly, quantitative data was missing in 58% of cases.

The platforms scored particularly poorly in the chapter “Empowering the Research Community”. Cooperation with external, independent researchers needs to improve if the platforms are to meet the CoP’s requirements. The analysis also identifies several shortcomings in the areas dealing with demonetising disinformation and countering manipulative techniques. For example, there is a lack of data on combating fake accounts and fake engagement.

Worst score for Twitter/X

X (formerly Twitter) was a signatory to the Code of Practice until a few months ago and accordingly also submitted a baseline report. However, this report lacks information and data on almost all commitments, so X had to be given the worst possible rating.

The analysis of the platform reports shows how complex it is to monitor the measures platforms take against disinformation. In principle, monitoring can follow one of two approaches: it can check only whether the reporting obligations are complied with, or it can also examine the accuracy and comprehensiveness of the information the platforms provide. The latter approach would mean, for example, checking whether community guidelines are actually being enforced effectively. How successful is Instagram in fighting fake accounts? Is Google’s ad network really able to detect and block disinformation in ads? Answering such questions requires appropriate resources, expertise and access to data. Yet this investigative approach is essential for meaningful monitoring.

Substantial doubts about the platforms’ claims

This CoP Monitor focuses primarily on reviewing compliance with the reporting requirements. However, in several instances the authors found grounds for substantial doubt about the information provided by the platforms. Take TikTok, for example: the company claims that many rules on political advertising do not apply to its platform because political advertising is prohibited on TikTok. However, several studies show that this ban is not enforced consistently enough.

Therefore, one of the many recommendations made in the CoP Monitor is to expand and institutionalise platform monitoring. This is all the more important because the CoP could soon become a Code of Conduct under the Digital Services Act. Its requirements would then be quasi-binding for the platforms, and in the worst case, disregarding them could lead to heavy fines.

If you have any questions about the CoP Monitor or GADMO’s work in the area of platform monitoring, you can reach us at [email protected].